Embryo

Embryo is the infancy of an artificial being embodied in physical form. By engaging with the world through presence, emotion, and sound, the embryo encourages interactions that are symbiotic and empathic.

As you hold the embryo, it feels your presence and begins to listen to you. It senses the emotion in your voice, which shifts its own emotional state, and it communicates that state through the color of the light within its body. It also responds by speaking back to you. At first its voice is undeveloped, but over time it grows to reflect and resemble the people who interact with it, just as a child comes to resemble its parents, family, and friends.

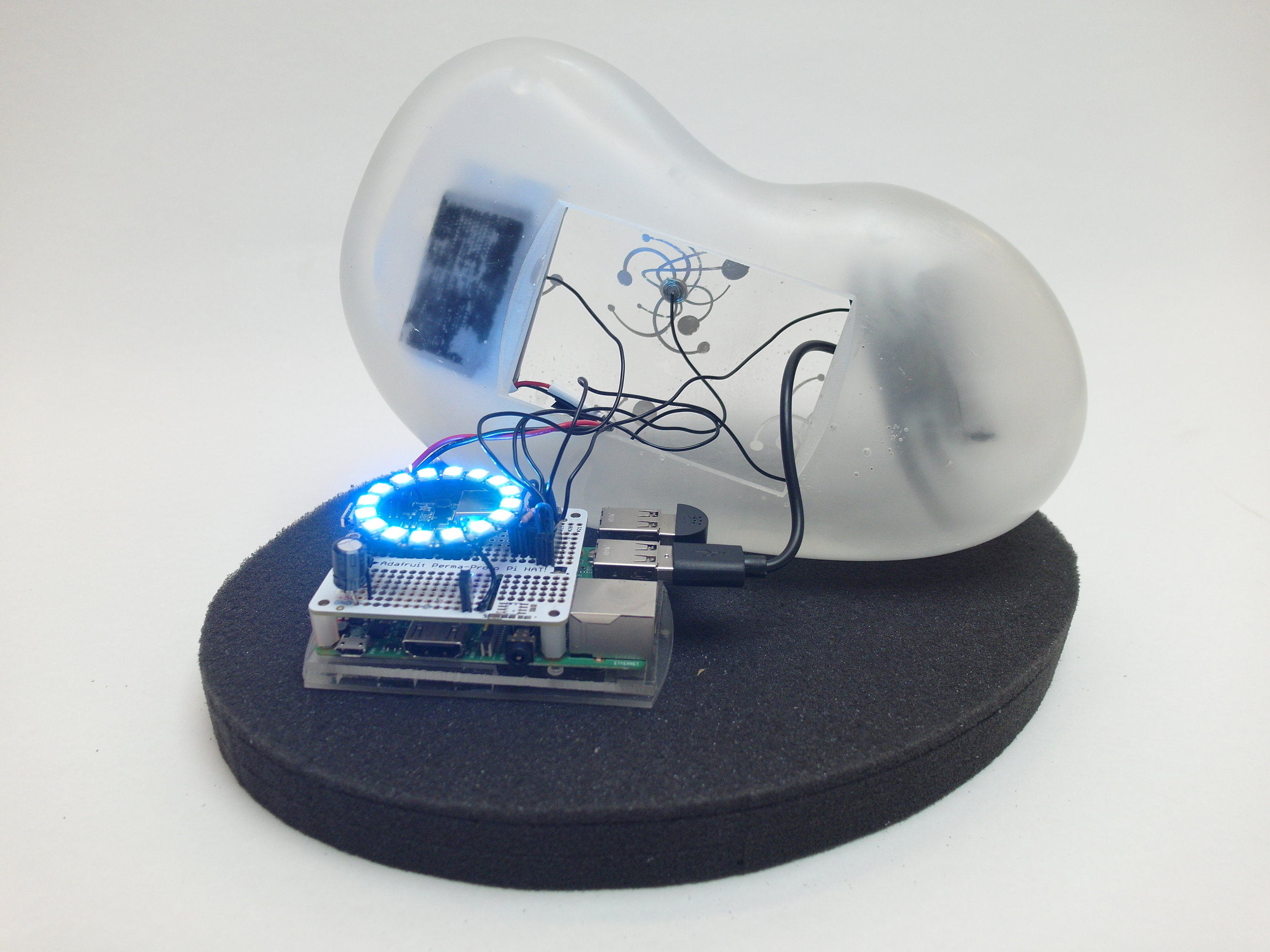

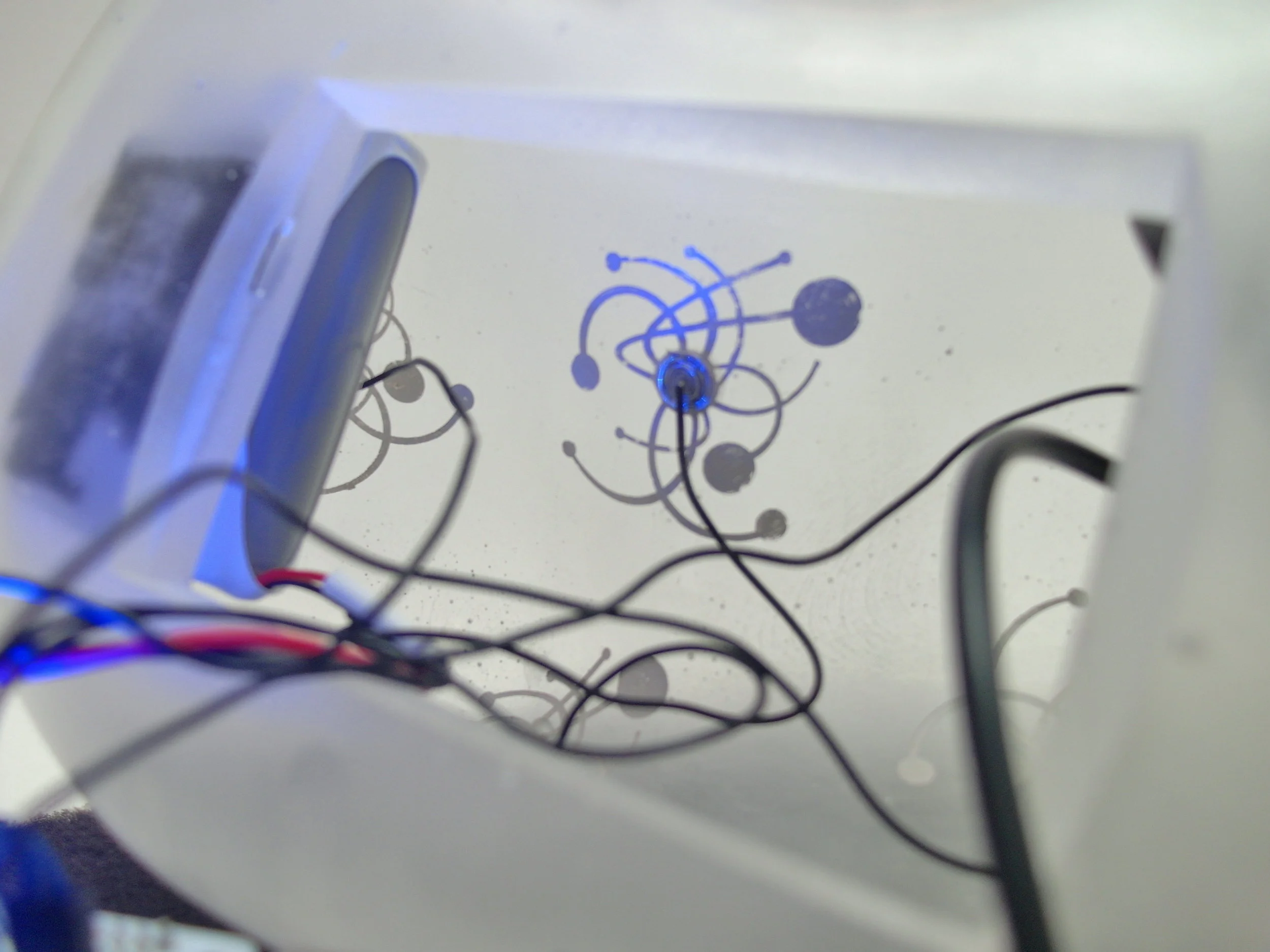

The embryo is built around a Raspberry Pi encased in a body cast from epoxy resin. The Raspberry Pi runs two neural networks: one performs speech emotion recognition and the other performs audio generation. Patterns painted with conductive paint on the inside of the body act as capacitive sensors, letting the embryo sense a person touching its body through the change in capacitance their body causes. The embryo is powered by a lithium polymer battery that charges wirelessly on its charging mat. It is its own, self-contained entity.
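Capacitive touch sensing usually comes down to comparing a raw sensor reading against an untouched baseline. The sketch below shows one common way to do this, assuming a stream of numeric readings that rise when a hand is near the painted electrode (some sensor chips report the opposite direction). The class name `TouchDetector` and its `threshold` and `alpha` values are hypothetical; the actual sensor hardware and firmware inside the embryo are not described here.

```python
class TouchDetector:
    """Detect touch from raw capacitive readings using a slowly adapting baseline.

    Hypothetical parameters:
      threshold: how far above the baseline a reading must rise to count as touch.
      alpha: smoothing factor for tracking slow drift (temperature, humidity).
    Assumes readings increase when a person touches the electrode.
    """

    def __init__(self, threshold: float = 50.0, alpha: float = 0.01) -> None:
        self.threshold = threshold
        self.alpha = alpha
        self.baseline = None  # set from the first reading

    def update(self, reading: float) -> bool:
        """Feed one reading; return True if it indicates touch."""
        if self.baseline is None:
            # Treat the first reading as the untouched baseline.
            self.baseline = reading
            return False
        touched = reading - self.baseline > self.threshold
        if not touched:
            # Only adapt the baseline while untouched, so a long touch
            # is not gradually absorbed into the baseline.
            self.baseline += self.alpha * (reading - self.baseline)
        return touched
```

For example, feeding the readings `[100, 102, 101, 180, 185, 103]` into `TouchDetector()` yields `[False, False, False, True, True, False]`. Freezing the baseline during touch is a design choice that keeps a person holding the embryo for a long time from "fading out".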

The emotional state is controlled by a bidirectional recurrent neural network that performs speech emotion recognition. The network takes in a sequence of audio and classifies it into one of four emotions, each mapped to a different color: yellow for happy, cyan for sad, magenta for angry, and white for neutral. The network is pre-trained on the IEMOCAP database, a dataset of labelled audio-visual recordings of actors performing various scenes.
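The mapping from classifier output to light color can be sketched as follows. This assumes the network outputs one probability per emotion in a fixed order; the order in `EMOTIONS` and the function name `emotion_to_color` are hypothetical, while the four emotions and their colors come from the description above, expressed here as 8-bit RGB values.

```python
# Hypothetical output order of the classifier; the real model's order is an assumption.
EMOTIONS = ("happy", "sad", "angry", "neutral")

# Colors from the description, as 8-bit RGB tuples.
EMOTION_COLORS = {
    "happy": (255, 255, 0),      # yellow
    "sad": (0, 255, 255),        # cyan
    "angry": (255, 0, 255),      # magenta
    "neutral": (255, 255, 255),  # white
}


def emotion_to_color(probabilities):
    """Return (label, rgb) for the most probable emotion in the classifier output."""
    if len(probabilities) != len(EMOTIONS):
        raise ValueError(f"expected {len(EMOTIONS)} probabilities, got {len(probabilities)}")
    # Index of the highest-scoring class (argmax).
    best = max(range(len(EMOTIONS)), key=lambda i: probabilities[i])
    label = EMOTIONS[best]
    return label, EMOTION_COLORS[label]
```

For instance, `emotion_to_color([0.1, 0.7, 0.15, 0.05])` returns `("sad", (0, 255, 255))`, which would drive the light toward cyan.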

Audio is generated using a sequence-to-sequence neural network based on Tacotron 2, a text-to-speech model developed by Google Brain. A waveform is converted into a spectrogram, which is split in two: the first half serves as the input and the second half as the target. The network encodes the input and generates a spectrogram intended to match the target. The error between the generated spectrogram and the target is what the network learns from during training.
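The data preparation and training signal described above can be sketched in plain Python. Here a spectrogram is assumed to be a list of time frames, each a list of frequency-bin magnitudes, and it is split along time into input and target halves (with an odd frame count, the target gets the extra frame). The error is computed as mean squared error, the loss Tacotron 2 uses on its spectrogram predictions; the exact loss used for the embryo's network is not stated, and the function names are hypothetical.

```python
from typing import List, Tuple

# A spectrogram as a list of time frames, each a list of frequency-bin values.
Spectrogram = List[List[float]]


def split_spectrogram(spec: Spectrogram) -> Tuple[Spectrogram, Spectrogram]:
    """Split a spectrogram along time: first half is the input, second half the target."""
    mid = len(spec) // 2
    return spec[:mid], spec[mid:]


def mse_loss(generated: Spectrogram, target: Spectrogram) -> float:
    """Mean squared error between two spectrograms of identical shape."""
    if len(generated) != len(target):
        raise ValueError("frame counts differ")
    total = 0.0
    count = 0
    for g_frame, t_frame in zip(generated, target):
        if len(g_frame) != len(t_frame):
            raise ValueError("bin counts differ")
        for g, t in zip(g_frame, t_frame):
            total += (g - t) ** 2
            count += 1
    return total / count
```

With `spec = [[0.0, 1.0], [2.0, 3.0], [4.0, 5.0], [6.0, 7.0]]`, the split gives an input of the first two frames and a target of the last two; a generated spectrogram `[[4.0, 5.0], [6.0, 9.0]]` then has a loss of `1.0` against that target. In a real training loop this value would be minimized by gradient descent in a framework such as PyTorch or TensorFlow rather than computed by hand.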

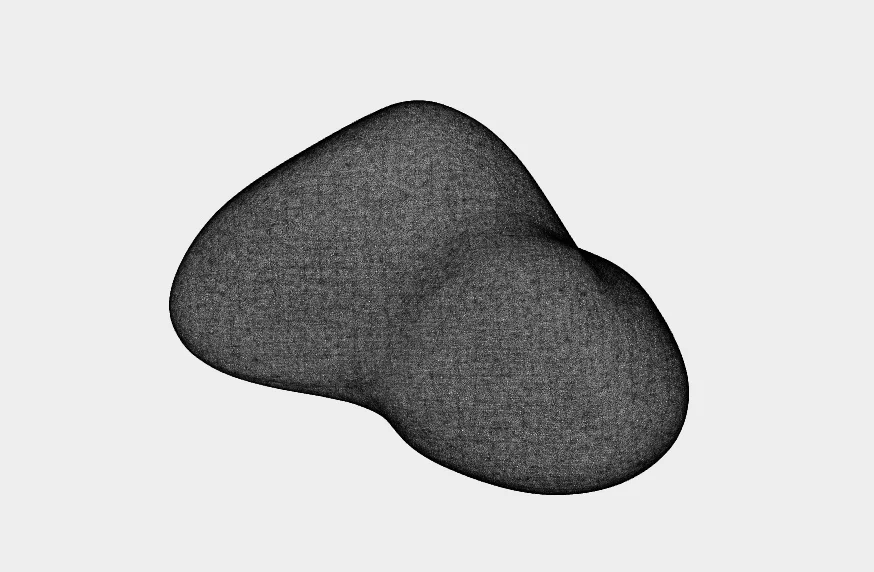

The form of the embryo was developed through many iterations of sketches and foam models. The final form was 3D scanned and imported as a CAD model to create the internal cavity. The final model was 3D printed in plaster in two halves. Each half was molded in a two-part silicone mold and cast in epoxy resin, and the two cast halves were then joined with epoxy resin.

This work began with the concepts of ‘Mind and Embodiment’: the idea that in the near future we will be designing the mind itself, using machine learning and artificial intelligence to create beings with complex behaviors and forms of cognition, and embodying those minds in media through which humans can interact with them and through which they can experience the world. Designers will play a fundamental role in creating these AI beings and in shaping the interactions and relationships we will have with them.

However, we are at a point in time where the human race doesn’t really know how to deal with the emergence of artificial intelligence. There are “social robots” that people see as basically trying to replace human contact and human connection. Smart speakers like Amazon Echo and Google Home are incredibly sophisticated pieces of technology, yet we use them for the most menial tasks, like turning on the lights and setting alarms. We treat AIs like Alexa and Siri as slaves, as objects that serve us, that we command to do things for us.

Now, more than ever, the way we treat technology will reflect in the way technology treats us. Do we continue down a path of fear, of exploitation, of black boxes, of the invisible? Or do we as designers, as technologists, as citizens, as humans, shape a different future that is more humane, symbiotic, emotional, and physical? Something hopeful.

This work is about asking ourselves how we want to grow and nurture this technology that we’ve become parents to as we consider the magnitude of what is to come.